news 2020 3 min read

REALITY Inc partners with disguise xR to deliver 3DCG live concert for Japanese group WONK

Following the cancellation of its 2020 live tour, Japanese experimental soul group WONK turned to disguise xR to deliver its first 3DCG live concert this summer. We sat down with xR production team REALITY Inc to find out how it all came together.

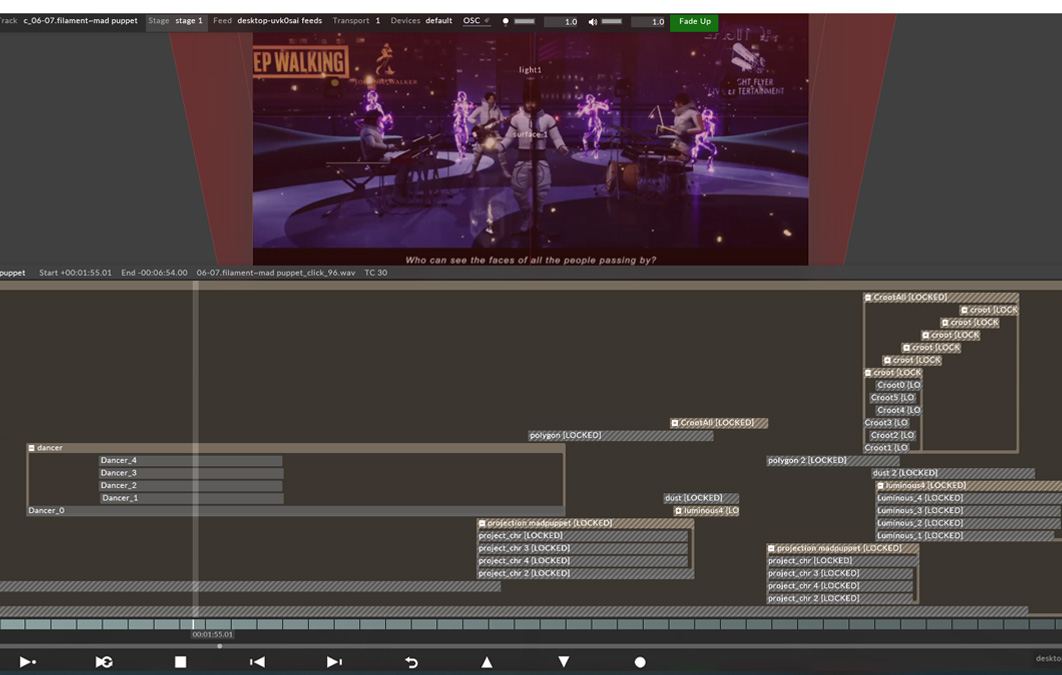

Tokyo-based REALITY Inc (formerly Wright Flyer Live Entertainment) are well known in the Japanese live production scene for using state-of-the-art technology to create unique live performances for artists. The team recently partnered with disguise to build a completely virtual setting for WONK's live performance, combining motion capture technology with Unreal Engine real-time content rendering and disguise xR to deliver an entirely computer-generated show. We find out more about their ambitious project below:

What was the main goal of this project?

We wanted to recreate the worldview of WONK's album "EYES" live in 3DCG. The purpose of introducing disguise was to establish workload separation, so that even staff who were not familiar with Unreal Engine could run the show in the same way as with real-world production equipment.

What were the main challenges you had to overcome to fulfil the brief?

A big challenge for us was portraying the use of musical instruments in the performance in a realistic way and implementing it live. There were dozens of "special effects", each with a specific meaning and linked to the lyrics and the tune of the song. It took some serious timing adjustments to make sure they didn't interfere with each other and to convey their meaning properly.

Did you have any concerns going into the project?

Our biggest concern was timing: would we have enough time to prepare, and would we be able to reach the quality we envisioned, one that satisfies both the artist and the customer? Synchronisation and preparation flow were also a concern, as we needed to bring all the sound, video, lighting and special effects sections in-house, just like in a live show.

How did you overcome these challenges?

Through the hard work of our staff and the trial and error of working with the artists! By adding various parameters to the OSC multiple and controlling them with keyframes, we can now achieve tight timing and smooth fades in and out.
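To make the idea of keyframed OSC parameters more concrete, here is a minimal Python sketch. It is not REALITY's or disguise's implementation: the address `/fx/opacity`, the keyframe values and the helper names are all hypothetical. It linearly interpolates a parameter between keyframes and packs the result as an OSC 1.0 message carrying a single float argument.

```python
import struct

def osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)

def osc_float_message(address: str, value: float) -> bytes:
    """Build an OSC 1.0 message with one big-endian float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)

def value_at(keyframes, t):
    """Linearly interpolate sorted (time_seconds, value) keyframes at time t."""
    if t <= keyframes[0][0]:
        return keyframes[0][1]
    for (t0, v0), (t1, v1) in zip(keyframes, keyframes[1:]):
        if t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return keyframes[-1][1]

# Hypothetical fade: in over 2 s, hold for 4 s, out over 2 s.
FADE = [(0.0, 0.0), (2.0, 1.0), (6.0, 1.0), (8.0, 0.0)]

# Message for the parameter value one second into the fade-in.
packet = osc_float_message("/fx/opacity", value_at(FADE, 1.0))
```

In a real setup, a packet like this would typically be sent over UDP to whichever application listens on that address; the receiving port and address scheme depend entirely on the target software.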

How did partnering with disguise benefit the production workflow?

We were able to eliminate the need for a production timeline in Unreal Engine, and we were also able to establish workload separation that allowed staff who were not familiar with the game engine to edit the production on the disguise timeline. The concept of BPM in the timeline makes it possible to copy a layer created on a track to a different track, so the same production can be reused on a track with a different BPM, which greatly improves work efficiency.
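The efficiency gain from a BPM-aware timeline can be illustrated with a short Python sketch. It assumes the general principle of storing cue positions in beats rather than seconds; it does not describe how disguise Designer stores layers internally, and the cue names and tempos are invented for illustration.

```python
def beats_to_seconds(beat: float, bpm: float) -> float:
    """Convert a position in beats to seconds at a constant tempo."""
    return beat * 60.0 / bpm

# Hypothetical cues, positioned in beats rather than seconds.
cues = {"flash": 0.0, "sweep": 4.0, "fade_out": 16.0}

def schedule(cues, bpm):
    """Resolve beat-relative cues to absolute times for a given tempo."""
    return {name: beats_to_seconds(beat, bpm) for name, beat in cues.items()}

at_120 = schedule(cues, 120.0)  # the same cues on a 120 BPM song
at_90 = schedule(cues, 90.0)    # and reused on a 90 BPM song
```

Because the cues are defined in beats, the same sequence lands on the same musical positions at either tempo without being re-edited.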

How did disguise support the production?

With disguise Designer as the overall control tower, we assembled the entire sequence with the audio playback, VJ software, and Unreal Engine controlled by the OSC from disguise RenderStream. disguise supports various protocols, including timecode (TC), DMX, MIDI and OSC, and there is no other software that supports OSC multiples and automation. Various functions, such as the set list and the section, are designed primarily for on-site work, where technical pressures often require an instant response.

What made you proud of this project?

For us, being able to control a virtual live performance with a sequencer that controls a real live performance was a great step for the future.

Project credits:

Engineering: Takashi Yasukawa

Lighting Manipulation: Jun Izawa

Effect Manipulation: Makoto Chida & Kenta Iijima

disguise Operator: Jun Izawa