Blog, 2021

Meet Orca: the Spain-based trailblazers in virtual production

We spoke to Adrian Pueyo, VFX and Virtual Production Supervisor at Orca Studios, about the studio’s journey into virtual production with disguise and the cutting-edge workflows that keep it at the forefront of the industry.

If you thought LED virtual production technologies like those used in Star Wars: The Mandalorian were reserved just for Hollywood sets, think again.

Founded by top Spanish producer Adrián Guerra and headed by veteran VFX expert Adrian Corsei, Orca Studios offers filmmakers state-of-the-art LED virtual production stages with full-service visual effects in Madrid, Barcelona and Las Palmas.

Powered by disguise, Orca’s cutting-edge virtual production pipeline has been used on a variety of film and TV productions as well as commercial shoots. Adrian Pueyo shares how Orca keeps adapting to the future to lead innovation in visual effects.

What first attracted you to working at Orca Studios?

Before Orca, I was a VFX compositor working on features like “Captain Marvel” and “Star Wars: The Last Jedi”. I was really inspired to work at Orca because I knew that a big part of the company’s strategy was to be at the forefront of new film technologies. It has been fascinating to be so deeply involved in prototyping new tech for LED-based virtual production, on top of the VFX work I’ve always done.

I was also attracted to the fact that so much of the Orca team is distributed. We’re a truly modern, global team, working remotely from anywhere in the world, from Spain to the UK and Poland. The whole artist-facing side of our pipeline is also entirely in the cloud, and data is mirrored between our different locations. That means if your machine is rendering or pulling up an asset, it’s all happening in the cloud, and you can scale the amount of processing power up or down without spending more money on hardware.

Has learning virtual production been a challenge?

It’s been interesting! There’s no established set of resources for learning virtual production, because the technology is all so new. If you have a background in visual effects, however, the transition is easier. Virtual production involves a lot of colour manipulation and an understanding of photography and light. I feel it’s more similar to post-production than to traditional production.


What advantages can Directors and DPs expect when working with Orca?

The big advantage we offer is choice. We are a high-end VFX studio that can deliver everything from pre-vis to final render fully in-house.

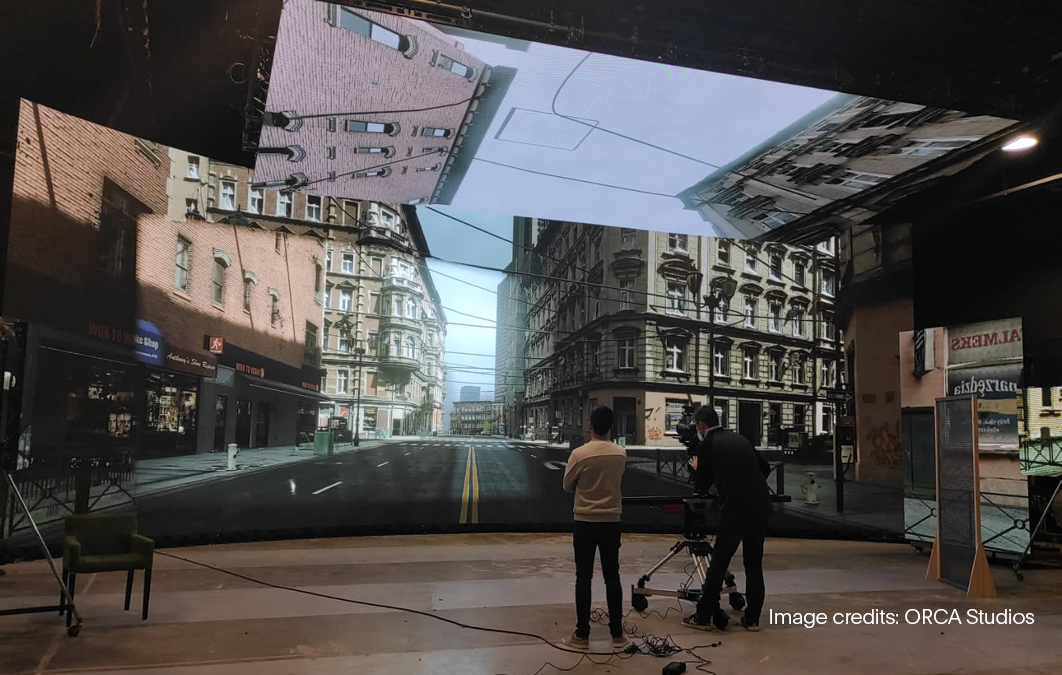

We also have cutting-edge technology. We can use our LED virtual production stages for an all-in-one filmmaking approach that blurs the lines between pre-production, production and post.

In a typical project, we first talk to the Director and DP about what they expect from a shot. Next, we recommend the best approach. For shots where our LED virtual production stage would work best, the Orca team generates backgrounds for the LEDs to display in real time on set. These backgrounds replace traditional green screens and can help DPs capture final pixels, including VFX, directly in camera.

For DPs, this means you can do virtual pre-vis and scout for locations on set. You can have golden hours that last for days, create any weather you like, or film any location in the world without moving an inch. We can also perform live colour corrections or change the background live on set, and have that lighting accurately reflected on your props and actors. No more green spill!

Of course, if any shots require more traditional post-production, we still have a professional VFX team here to help with that too. It’s a truly tailored approach.

Will your workflow reduce the cost of productions?

Our pipeline effectively combines virtual production and visual effects. It allows most of the critical decisions to be made in the early stages of pre-production. Because of that early decision-making, everyone can see the final creative vision on the LEDs during principal photography, so there are typically fewer expensive iterations in post.

This workflow can also save money on travel and on the number of people who need to be on set, which has been both efficient and safe during the Covid-19 pandemic.

What technologies do you use to create and enrich the virtual stage?

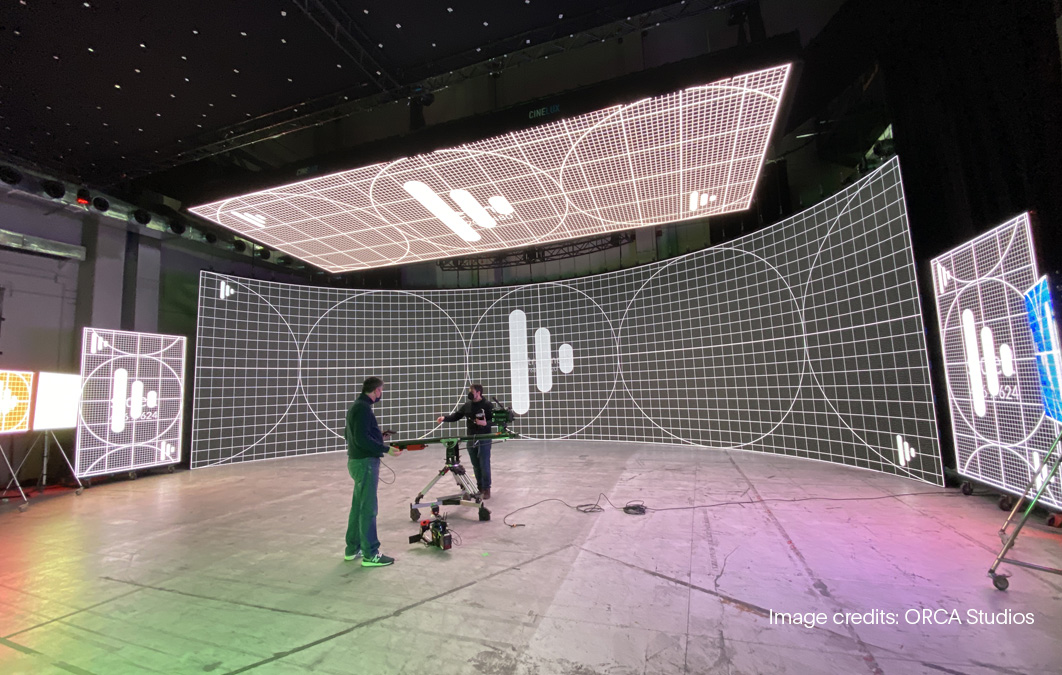

There are many technologies that feed into our workflow. At the heart of it all is the disguise software and hardware. We need a high-end solution to get involved in high-end virtual production projects.

We’ve been using disguise systems for a year now to map both the real-time Unreal Engine feed and the camera position – which is tracked using Mo-Sys StarTracker – through to the final live LED screen. This is all done with less than 0.3 seconds of latency thanks to disguise’s cluster rendering system, Unreal Engine and NVIDIA GPUs.

To capture real environments, we use a combination of techniques ranging from photogrammetry and 360° photography to LiDAR scanning. We also create purely digital environments with Unreal Engine and other industry-standard VFX software such as Maya, Houdini and Nuke. We can handle heavy scenes with many millions of polygons, so we can display highly detailed geometry on our LEDs. That means less cleanup time for 3D captures and richer models.

What advice would you give a DP who is looking to work on a virtual production set?

Have an open mind. If you spend time exploring the possibilities of LED virtual production and playing with the lights and the visuals, you’ll get the best results. You just need to refocus the workload on pre-production rather than post. That’s why it’s important to spend a day in the virtual production volume before your shoot begins.

Another piece of advice is not to view LEDs and green screens as mutually exclusive; you can combine them in the same project. If there’s a shot where you would feel more comfortable having a traditional VFX key, we can display green, or any other colour, on our LEDs only in the area directly around the actors.

That means our post-production team can still key, but without the front spill or wrinkles you’d get with a physical green screen. The crew can also still see and react to the rest of the backdrop, and the 3D background is still stored for you to re-render if needed.

What do you most look forward to for the future of virtual production?

We’re looking forward to industry advances in rendering, particularly real-time ray tracing with minimal impact on performance. The new cluster rendering option in the disguise workflow is great for this, as every frame can be rendered at much higher quality.

We’re also excited about advances in colour management. Handling all the conversions between input and output sources is tough in LED-based virtual production, because different screens have different spectral responses. We’ve been working with disguise’s ACES integration for a while, and it is now a core part of our colour management workflow.

We see so much potential in virtual production, and seeing so many of our clients just as excited to explore that potential is what gets us out of bed in the morning.

Originally published in Spanish for Camera & Light Magazine.